Knowledge Graphs and Large Language Models: the perfect match!

Out-of-the-box solutions for RAG systems (Retrieval Augmented Generation) are becoming increasingly widespread. They promise to make a company’s knowledge accessible from documents such as PDFs or Word files using Large Language Models (LLMs) in the form of a chat. In this context, the question inevitably arises: What role do knowledge graphs play in this scenario – and what added value can they offer?

In other words: If I can make all my texts available via chat, why should I invest time and money in structuring my knowledge?

From the perspective of a company that has already invested these resources, i.e. one that already has a knowledge graph, the question is whether an LLM can still offer added value. This is especially true as LLMs are known for hallucinating and producing false information.

Structured data for the Large Language Model (LLM)

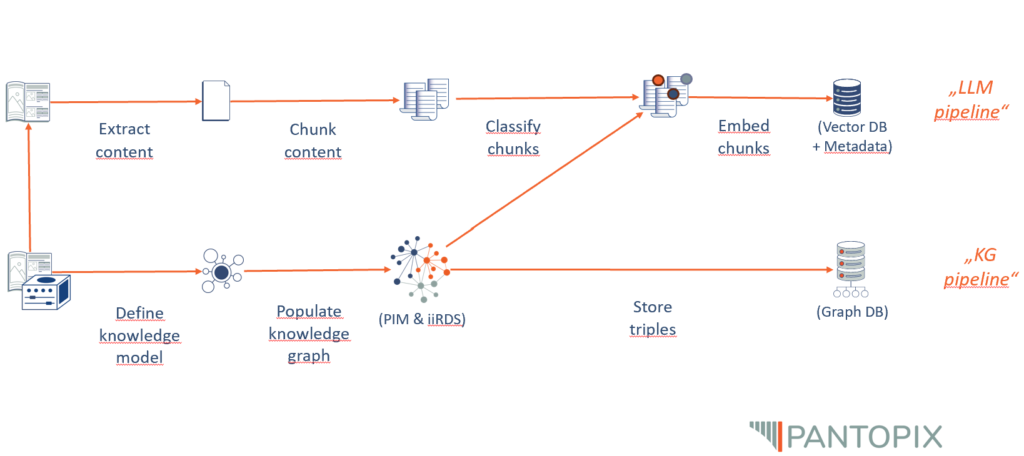

To make text files such as PDFs available to an LLM-driven chat, the files must be broken down into meaningful sections (chunking), and each section must be converted into a vector (embedding) and stored in a vector database. When a request is sent to the chat, the request itself is vectorised, and the text section that is semantically closest to it is determined with a geometric similarity measure (for example cosine similarity). That section is passed to the LLM, which reformulates it into the final answer.
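The steps described above can be sketched in a few lines of Python. This is only a minimal illustration under simplifying assumptions: `embed` is a toy word-count stand-in for a real embedding model, a plain list stands in for the vector database, and all function names and the sample text are invented for this example.

```python
import math
import re
from collections import Counter


def chunk(text: str) -> list[str]:
    """Split a text into paragraph-level chunks (one possible granularity)."""
    return [p.strip() for p in text.split("\n\n") if p.strip()]


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, index: list[tuple[str, Counter]]) -> tuple[str, float]:
    """Return the chunk whose vector is closest to the query vector."""
    q = embed(query)
    return max(((text, cosine(q, vec)) for text, vec in index), key=lambda hit: hit[1])


# Hypothetical sample document with two paragraphs.
document = (
    "The pump must be serviced every 500 operating hours.\n\n"
    "The control unit is configured via the web interface."
)

# The list of (chunk, vector) pairs stands in for the vector database.
index = [(c, embed(c)) for c in chunk(document)]

# best_chunk would then be handed to the LLM as context for the answer.
best_chunk, score = retrieve("How often must the pump be serviced?", index)
```

In this sketch the first paragraph is selected because it shares the most words with the question. With real embeddings the ranking reflects semantic similarity rather than word overlap, but the flow stays the same.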

A key challenge here is determining the appropriate granularity for selecting meaningful text sections—whether at the document, chapter, page, paragraph, or sentence level—and defining the threshold for similarity between query and source vectors to ensure content relevance. Enhancing text sections with metadata (such as topic, author, text type, or position) can improve their representation in the semantic space by refining embeddings. However, these methods require customization to fit the specific dataset and are not readily available as out-of-the-box solutions.
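The effect of metadata and of a similarity threshold can be illustrated with a small Python sketch. It is deliberately simplified: word overlap (Jaccard similarity) stands in for the distance between real embeddings, and the threshold value, the metadata fields and the sample texts are assumptions made for this example.

```python
import re


def tokens(text: str) -> set[str]:
    """Lower-cased word set; a toy stand-in for a real embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of the two word sets (0.0 = disjoint, 1.0 = identical)."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


def enrich(text: str, metadata: dict[str, str]) -> str:
    """Prepend metadata so that it becomes part of what is embedded."""
    header = " | ".join(f"{key}: {value}" for key, value in metadata.items())
    return f"{header} | {text}"


THRESHOLD = 0.1  # assumed value; has to be tuned for each dataset

# Hypothetical text section that never names the pump it refers to.
section = "It must be serviced every 500 operating hours."
metadata = {"topic": "pump maintenance", "text type": "manual"}
query = "pump maintenance interval"

plain_score = similarity(query, section)
enriched_score = similarity(query, enrich(section, metadata))

plain_relevant = plain_score >= THRESHOLD        # False: no shared words
enriched_relevant = enriched_score >= THRESHOLD  # True: the topic now matches
```

The section only becomes retrievable once its topic is part of the embedded text, and whether it counts as relevant depends entirely on where the threshold is set. Both choices have to be made for the specific dataset.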

This is precisely where knowledge graphs are strong. In a graph, the overall context of each individual piece of information is modelled from the outset and can be retrieved to any depth and breadth with simple queries (for example in SPARQL). For a chat system, this means that once the user query has been assigned to a topic, the answer can be assembled from information modules that fit the context without having to rely on the structure of the semantic vector space.
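How context can be collected "in any depth" is easiest to see on a toy graph. The following Python sketch keeps the triples in memory and follows outgoing links hop by hop. In a real setup this would be a SPARQL query against a triple store; the `ex:` identifiers, the data and the function names are invented for this illustration.

```python
from typing import Optional

Triple = tuple[str, str, str]

# Hypothetical product knowledge as subject-predicate-object triples.
GRAPH: set[Triple] = {
    ("ex:PumpX200", "rdf:type", "ex:Pump"),
    ("ex:PumpX200", "ex:hasComponent", "ex:SealKit7"),
    ("ex:PumpX200", "ex:maintenanceInterval", "500 operating hours"),
    ("ex:SealKit7", "ex:hasPartNumber", "SK-0007"),
}


def match(graph: set[Triple], s: Optional[str] = None,
          p: Optional[str] = None, o: Optional[str] = None) -> list[Triple]:
    """Return all triples matching the pattern; None acts as a wildcard.

    Roughly what `SELECT ?p ?o WHERE { ex:PumpX200 ?p ?o }` does in SPARQL.
    """
    return sorted(
        t for t in graph
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )


def context(graph: set[Triple], entity: str, depth: int = 1) -> list[Triple]:
    """Collect all triples reachable from `entity` within `depth` hops."""
    seen: set[Triple] = set()
    frontier = {entity}
    for _ in range(depth):
        next_frontier: set[str] = set()
        for node in frontier:
            for triple in match(graph, s=node):
                if triple not in seen:
                    seen.add(triple)
                    next_frontier.add(triple[2])  # follow the link to the object
        frontier = next_frontier
    return sorted(seen)


direct_facts = context(GRAPH, "ex:PumpX200", depth=1)   # facts about the pump itself
wider_context = context(GRAPH, "ex:PumpX200", depth=2)  # plus facts about its components
```

Increasing `depth` widens the context without any change to how the data is stored, which is the property the chat system can rely on when assembling an answer.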

Furthermore, the information in a knowledge graph is easily accessible, dynamically and modularly customisable, up-to-date and curated. Therefore, the knowledge encoded in the knowledge graph can not only contribute to the context expansion of the LLM, but can also be used to validate its output. Since, from a syntactic point of view, the information in the knowledge graph is stored in the form of subject-predicate-object sentences, the statements of the LLM can be checked and, if necessary, corrected after a linguistic analysis of the LLM response.
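A check of this kind could look roughly like the following Python sketch. It assumes that the linguistic analysis has already turned the LLM response into subject-predicate-object claims, which is the hard part and is not shown here. The graph content, the set of single-valued predicates and the function name are assumptions made for this example.

```python
from typing import Optional

Triple = tuple[str, str, str]

# Hypothetical curated knowledge.
GRAPH: set[Triple] = {
    ("PumpX200", "maintenanceInterval", "500 operating hours"),
    ("PumpX200", "hasComponent", "SealKit7"),
}

# Assumption: only for these predicates does a different value mean a contradiction.
SINGLE_VALUED = {"maintenanceInterval"}


def validate(claim: Triple, graph: set[Triple]) -> tuple[str, Optional[list[str]]]:
    """Compare one extracted claim with the graph.

    Returns a verdict and, for contradictions, the values the graph holds instead.
    """
    subject, predicate, obj = claim
    known = sorted(o for s, p, o in graph if s == subject and p == predicate)
    if obj in known:
        return "supported", None
    if known and predicate in SINGLE_VALUED:
        return "contradicted", known  # the graph value can be used as correction
    return "unknown", None  # the graph cannot confirm or refute the claim


# Claims as they might come out of the analysis of an LLM answer.
ok = validate(("PumpX200", "maintenanceInterval", "500 operating hours"), GRAPH)
wrong = validate(("PumpX200", "maintenanceInterval", "1000 operating hours"), GRAPH)
open_claim = validate(("PumpX200", "hasComponent", "FilterF3"), GRAPH)
```

The three verdicts matter in practice: a contradicted claim can be corrected from the graph, whereas an unknown claim is merely not covered by the curated knowledge and may still be true.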

Why not just Knowledge Graphs?

If knowledge graphs have this potential, why do I need an LLM? The answer lies in how accessible the knowledge graph is to users, in two respects. First, querying the knowledge graph is technically simple, but it still requires knowledge of a query language such as SPARQL and authorisation to access the knowledge graph directly. Most users have neither. Second, the answer to such a query has the above-mentioned triple structure of subject-predicate-object sentences, which can be further obscured by technical necessities such as namespaces and output formats.
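What a raw query result looks like, and how it might be prepared for an LLM, can be sketched as follows. The IRIs, the helper names and the prompt wording are hypothetical, and no actual LLM is called; the sketch only builds the text that would be sent to one.

```python
import re

Triple = tuple[str, str, str]

# A raw result row as a triple store might return it (hypothetical IRIs).
RAW_RESULT: list[Triple] = [
    (
        "http://example.org/product/PumpX200",
        "http://example.org/schema#maintenanceInterval",
        "500 operating hours",
    ),
]


def local_name(term: str) -> str:
    """Strip the namespace from an IRI; literals are returned unchanged."""
    if not term.startswith("http"):
        return term
    return re.split(r"[/#]", term)[-1]


def split_camel_case(name: str) -> str:
    """Turn 'maintenanceInterval' into 'maintenance interval'."""
    return re.sub(r"(?<=[a-z])(?=[A-Z])", " ", name).lower()


def verbalise(triple: Triple) -> str:
    """Render one triple as a plain, namespace-free statement."""
    subject, predicate, obj = triple
    return f"{local_name(subject)} {split_camel_case(local_name(predicate))} {local_name(obj)}"


def build_prompt(question: str, triples: list[Triple]) -> str:
    """Assemble the context that an LLM would turn into a natural-language answer."""
    facts = "\n".join(f"- {verbalise(t)}" for t in triples)
    return f"Answer using only these facts:\n{facts}\n\nQuestion: {question}"


prompt = build_prompt("How often does the PumpX200 need servicing?", RAW_RESULT)
```

The user never sees the IRIs or the triple structure. The graph supplies the facts, and the language model is left with the task of phrasing them.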

Ultimately, most users clearly prefer to interact with their company’s knowledge in natural language, which in turn makes that knowledge more valuable for the company. This task is also the LLM's greatest strength: to serve as the interface between humans and computer systems in the language of humans, not in the language of the computer!

LLM for expanding the Knowledge Graph

Conversely, not only can the knowledge graph raise the LLM to a higher level, but the language model can also contribute to the further development of the knowledge graph. For example, LLMs can act as semantic annotators. When I ask an LLM for the content metadata of this article, it responds with a list of 24 keywords beginning with ‘LLM’, ‘Knowledge Graph’, ‘RAG system’, ‘hallucination’, ‘value proposition’. In this way, texts can be classified, analysed and placed in the knowledge graph. Whether human-in-the-loop approaches are needed here, and how far the process can be automated, depends on the respective use case and the complexity of the underlying metadata model.
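The annotation step, including a simple human-in-the-loop fallback, can be sketched in Python as follows. The function `suggest_keywords` is a stub that returns the five keywords quoted above instead of calling a real LLM, and the vocabulary, the `ex:` identifiers and the document ID are invented for this example.

```python
Triple = tuple[str, str, str]

# Hypothetical controlled vocabulary of the knowledge graph.
VOCABULARY: dict[str, str] = {
    "llm": "ex:LLM",
    "knowledge graph": "ex:KnowledgeGraph",
    "rag system": "ex:RAGSystem",
    "hallucination": "ex:Hallucination",
}


def suggest_keywords(text: str) -> list[str]:
    """Stub for the LLM call; a real system would send `text` to a model."""
    return ["LLM", "Knowledge Graph", "RAG system", "hallucination", "value proposition"]


def annotate(doc_id: str, text: str) -> tuple[list[Triple], list[str]]:
    """Map suggested keywords to graph concepts.

    Keywords that match the vocabulary become triples; all others are
    collected for review by a human editor.
    """
    triples: list[Triple] = []
    needs_review: list[str] = []
    for keyword in suggest_keywords(text):
        concept = VOCABULARY.get(keyword.lower())
        if concept is not None:
            triples.append((doc_id, "ex:hasTopic", concept))
        else:
            needs_review.append(keyword)
    return triples, needs_review


new_triples, review_queue = annotate("ex:Article42", "full text of the article")
```

Here four keywords map directly to existing concepts, while ‘value proposition’ lands in the review queue. How much of that queue can be handled automatically depends on the metadata model, as described above.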

In a nutshell: Advantages of Knowledge Graphs as support for LLMs

To summarise once again: Knowledge graphs are necessary when information needs to be represented in complex, networked structures that can be managed over the long term. They enable efficient processing, linking and querying of data that goes far beyond the capabilities of a language model that has only been trained on text documents.

All the advantages of knowledge graphs as support for LLMs at a glance:

- Complex links and relationships: Knowledge graphs create logical links between entities and enable deeper analyses across distributed documents.

- Context sensitivity and meaning: They explicitly store and link context so that terms and concepts are understood in their respective meaning.

- Structured and unstructured data: Knowledge graphs integrate different data sources and enable a standardised, searchable knowledge base.

- Consistency and knowledge management: They allow targeted updates and ensure that knowledge remains structured, consistent and reusable.

- Scalability and longevity: They grow with the amount of data, improve continuously and enable long-term, sustainable knowledge management.

- Reduction of hallucinations: Knowledge Graphs ensure that AI models rely on verifiable and logically connected information, minimizing speculative or incorrect responses (hallucinations).

Contact us

Jonathan Schrempp

Head of Knowledge Engineering

- jonathan.schrempp@pantopix.com